The Conference on Neural Information Processing Systems (NeurIPS) is underway in San Diego! Below is a list of work by Princeton students, postdocs, research software engineers, and faculty that will be showcased at the conference.

Best Paper Award:

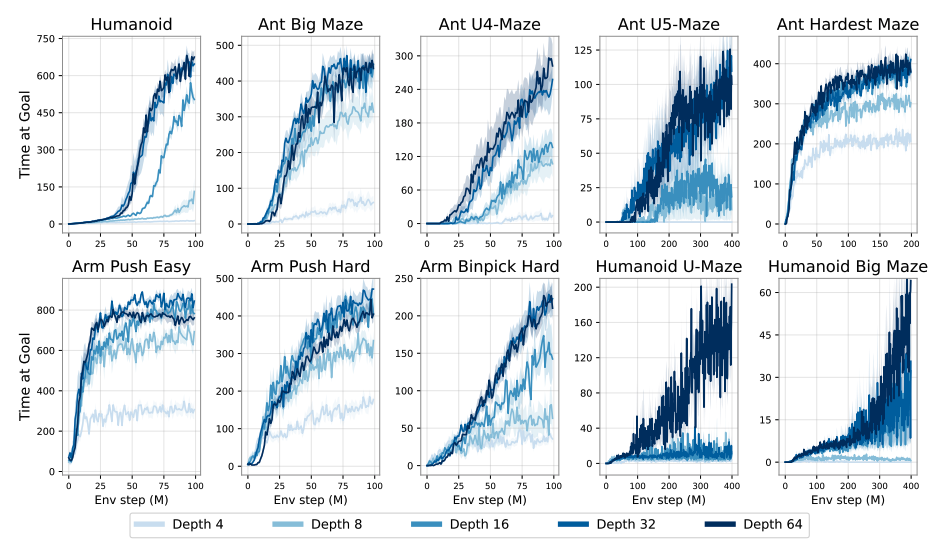

1000 Layer Networks for Self-Supervised RL: Scaling Depth Can Enable New Goal-Reaching Capabilities

Authors: Kevin Wang, Ishaan Javali, Michał Bortkiewicz, Tomasz Trzcinski, Benjamin Eysenbach

Spotlight:

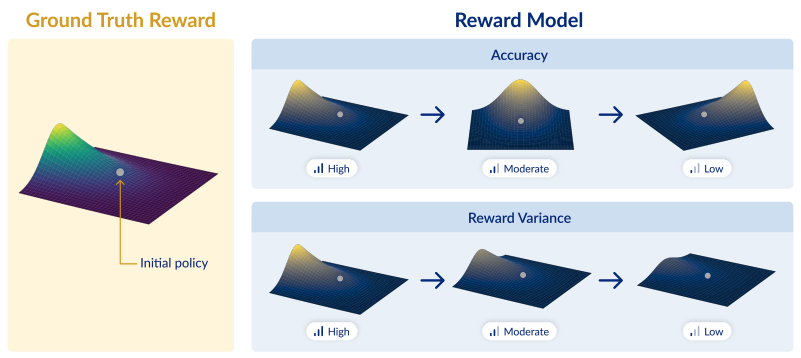

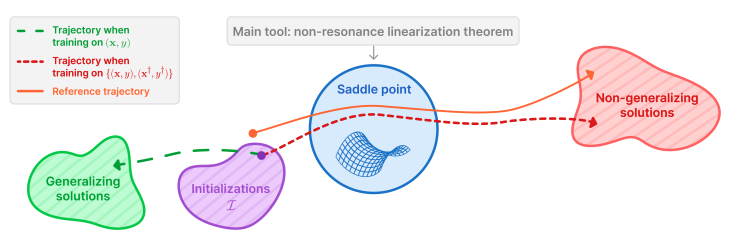

What Makes a Reward Model a Good Teacher? An Optimization Perspective

Authors: Noam Razin, Zixuan Wang, Hubert Strauss, Stanley Wei, Jason Lee, Sanjeev Arora

Spotlight:

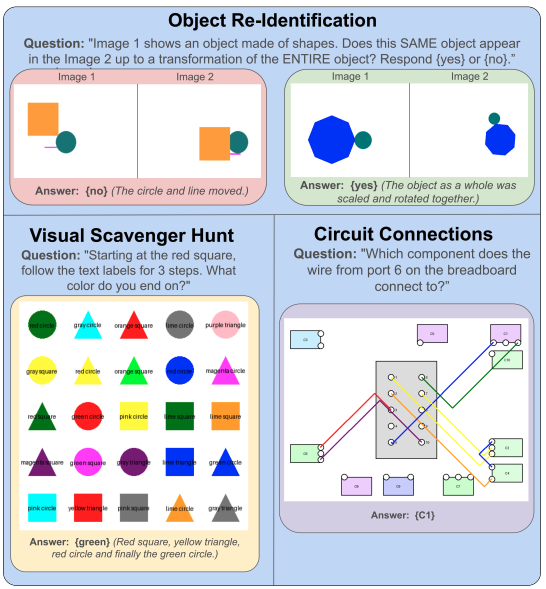

VLMs have Tunnel Vision: Evaluating Nonlocal Visual Reasoning in Leading VLMs

Authors: Shmuel Berman, Jia Deng

Spotlight:

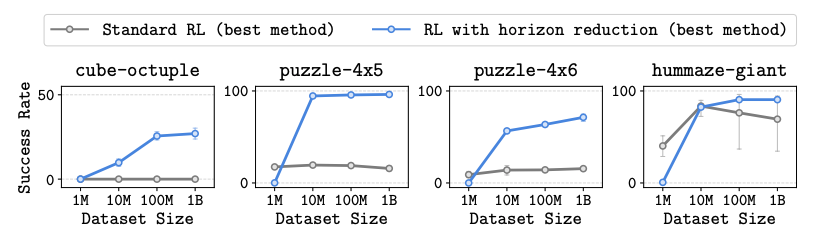

Horizon Reduction Makes RL Scalable

Authors: Seohong Park, Kevin Frans, Deepinder Mann, Benjamin Eysenbach, Aviral Kumar, Sergey Levine

Spotlight:

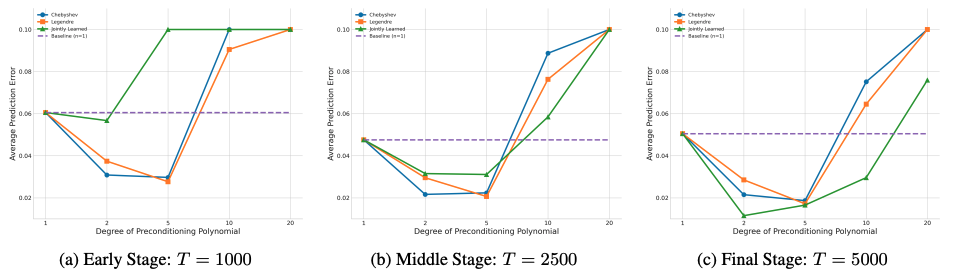

Universal Sequence Preconditioning

Authors: Annie Marsden, Elad Hazan

Spotlight:

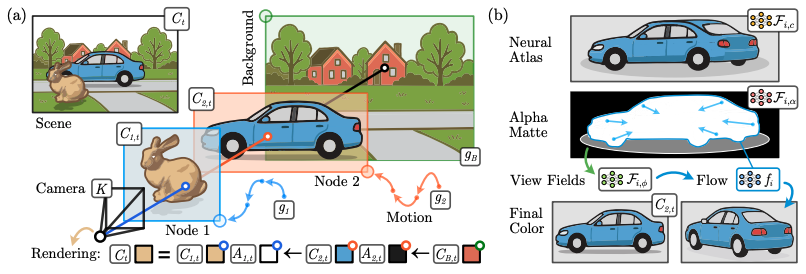

Neural Atlas Graphs for Dynamic Scene Decomposition and Editing

Authors: Jan Philipp Schneider, Pratik S. Bisht, Ilya Chugunov, Andreas Kolb, Michael Moeller, Felix Heide

Spotlight:

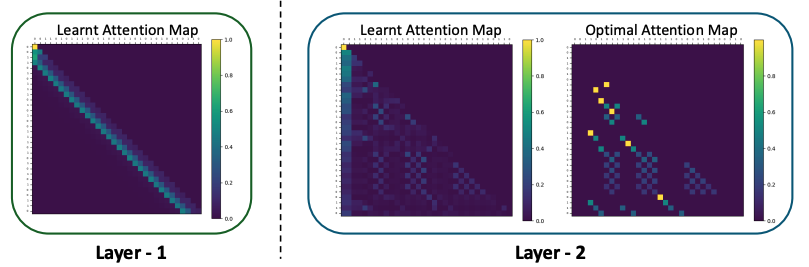

What One Cannot, Two Can: Two-Layer Transformers Provably Represent Induction Heads on Any-Order Markov Chains

Authors: Chanakya Ekbote, Ashok Vardhan Makkuva, Marco Bondaschi, Nived Rajaraman, Michael Gastpar, Jason Lee, Paul Liang

Spotlight:

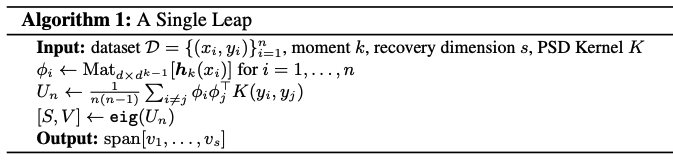

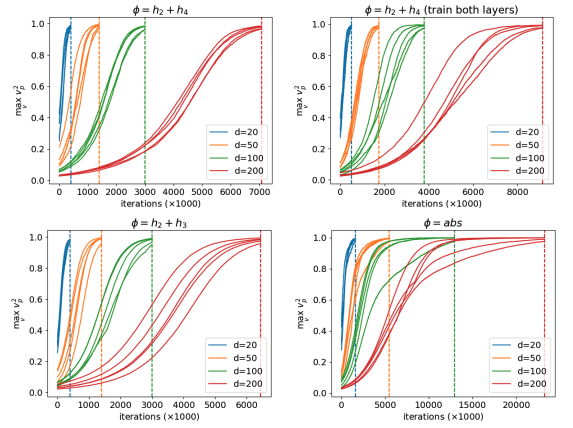

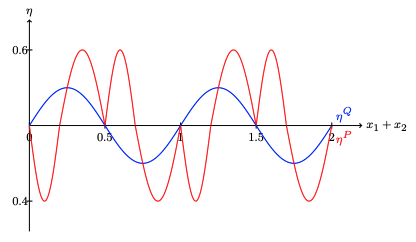

The Generative Leap: Tight Sample Complexity for Efficiently Learning Gaussian Multi-Index Models

Authors: Alex Damian, Jason Lee, Joan Bruna

Spotlight:

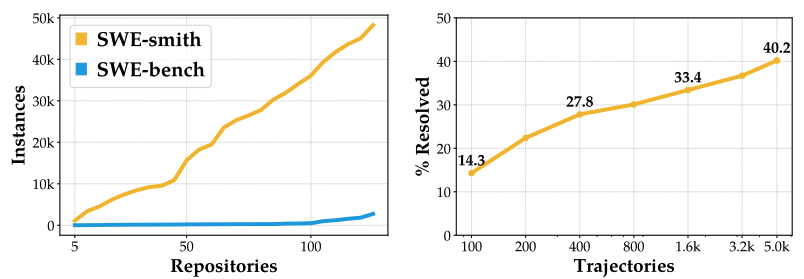

SWE-smith: Scaling Data for Software Engineering Agents

Authors: John Yang, Kilian Lieret, Carlos Jimenez, Alexander Wettig, Kabir Khandpur, Yanzhe Zhang, Binyuan Hui, Ofir Press, Ludwig Schmidt, Diyi Yang

Spotlight:

The Implicit Bias of Structured State Space Models Can Be Poisoned With Clean Labels

Authors: Yonatan Slutzky, Yotam Alexander, Noam Razin, Nadav Cohen

Spotlight:

CURE: Co-Evolving Coders and Unit Testers via Reinforcement Learning

Authors: Yinjie Wang, Ling Yang, Ye Tian, Ke Shen, Mengdi Wang

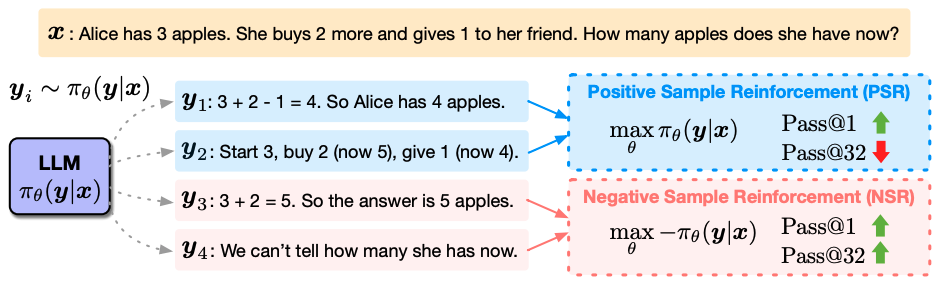

The Surprising Effectiveness of Negative Reinforcement in LLM Reasoning

Authors: Xinyu Zhu, Mengzhou Xia, Zhepei Wei, Wei-Lin Chen, Danqi Chen, Yu Meng

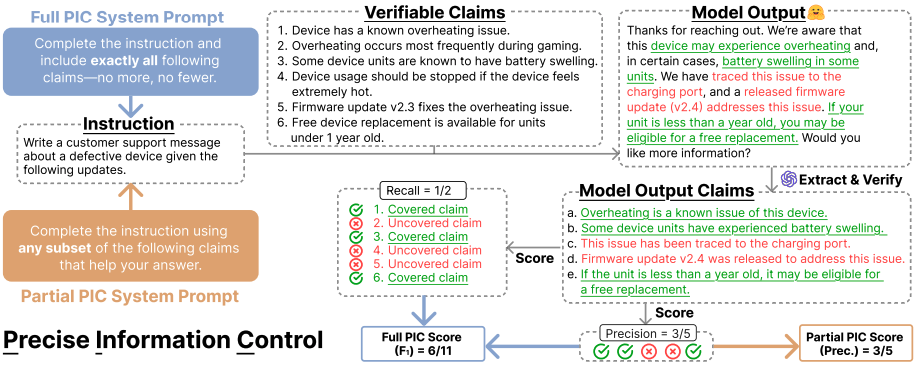

Precise Information Control in Long-Form Text Generation

Authors: Jacqueline He, Howard Yen, Margaret Li, Stella Li, Zhiyuan Zeng, Weijia Shi, Yulia Tsvetkov, Danqi Chen, Pang Wei Koh, Luke Zettlemoyer

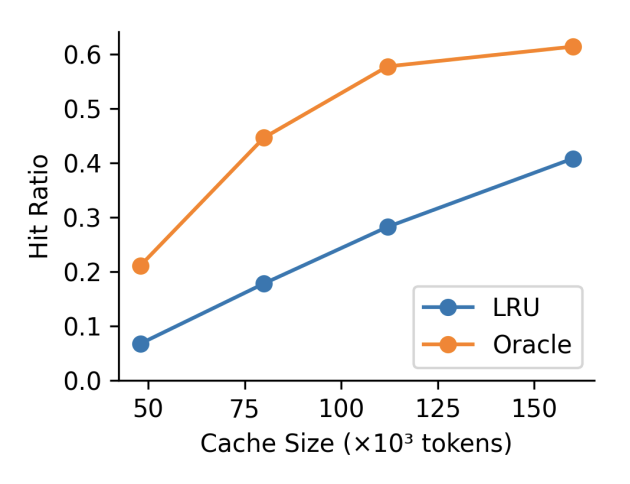

Learned Prefix Caching for Efficient LLM Inference

Authors: Dongsheng Yang, Austin Li, Kai Li, Wyatt Lloyd

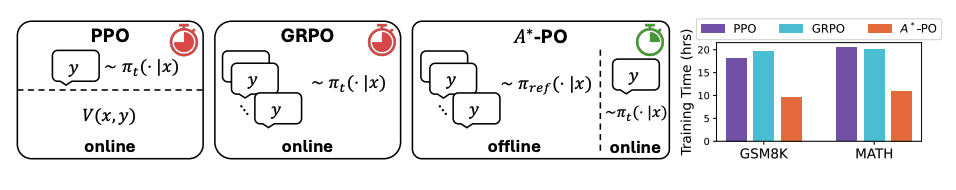

Accelerating RL for LLM Reasoning with Optimal Advantage Regression

Authors: Kianté Brantley, Mingyu Chen, Zhaolin Gao, Jason Lee, Wen Sun, Wenhao Zhan, Xuezhou Zhang

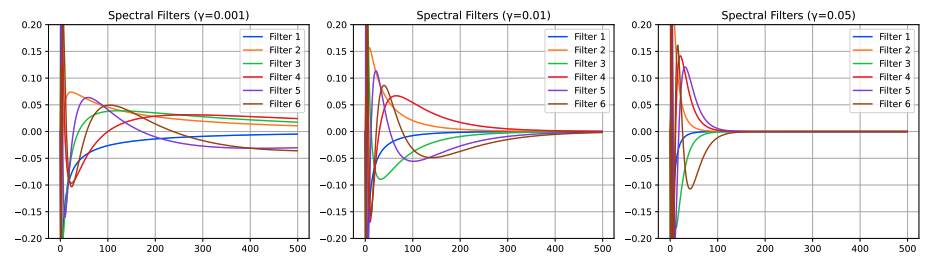

Efficient Spectral Control of Partially Observed Linear Dynamical Systems

Authors: Anand Brahmbhatt, Gon Buzaglo, Sofiia Druchyna, Elad Hazan

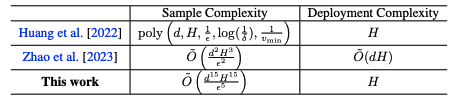

Deployment Efficient Reward-Free Exploration with Linear Function Approximation

Authors: Zihan Zhang, Yuxin Chen, Jason Lee, Simon Du, Lin Yang, Ruosong Wang

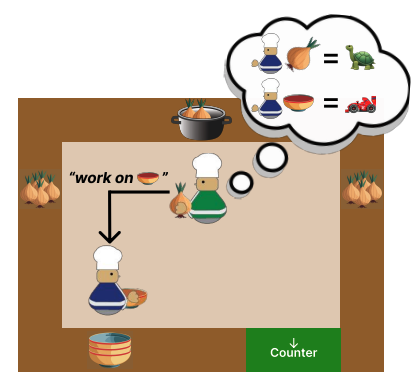

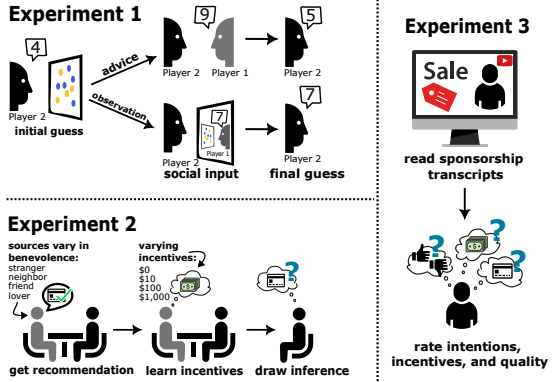

Partner Modelling Emerges in Recurrent Agents (But Only When It Matters)

Authors: Ruaridh Mon-Williams, Max Taylor-Davies, Elizabeth Mieczkowski, Natalia Vélez, Neil Bramley, Yanwei Wang, Tom Griffiths, Christopher G Lucas

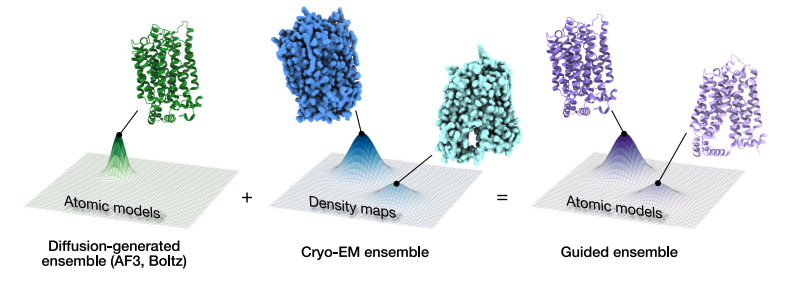

Multiscale guidance of protein structure prediction with heterogeneous cryo-EM data

Authors: Rishwanth Raghu, Axel Levy, Gordon Wetzstein, Ellen Zhong

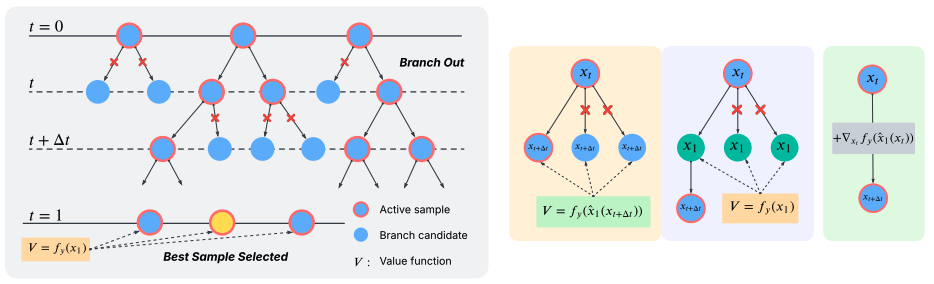

Training-Free Guidance Beyond Differentiability: Scalable Path Steering with Tree Search in Diffusion and Flow Models

Authors: Yingqing Guo, Yukang Yang, Hui Yuan, Mengdi Wang

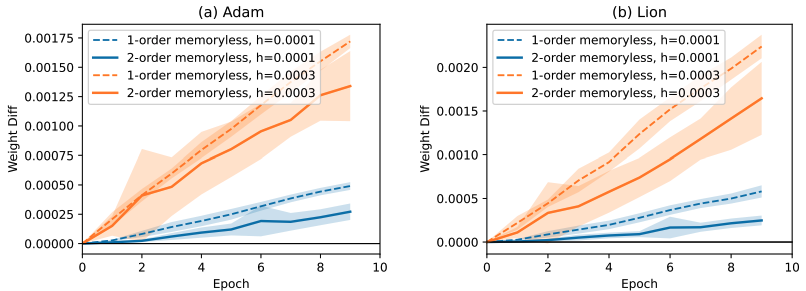

How Memory in Optimization Algorithms Implicitly Modifies the Loss

Authors: Matias Cattaneo, Boris Shigida

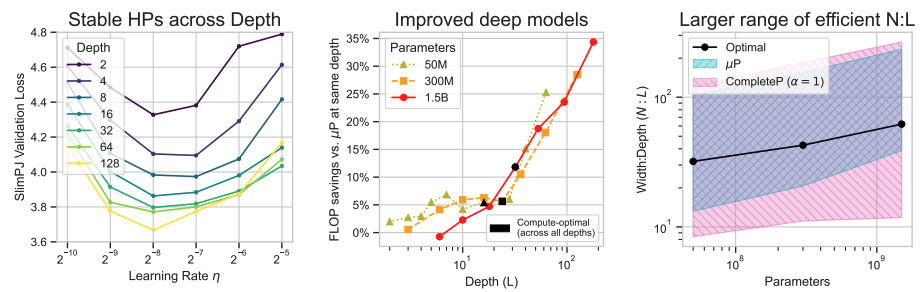

Don’t be lazy: CompleteP enables compute-efficient deep transformers

Authors: Nolan Dey, Bin Zhang, Lorenzo Noci, Mufan Li, Blake Bordelon, Shane Bergsma, Cengiz Pehlevan, Boris Hanin, Joel Hestness

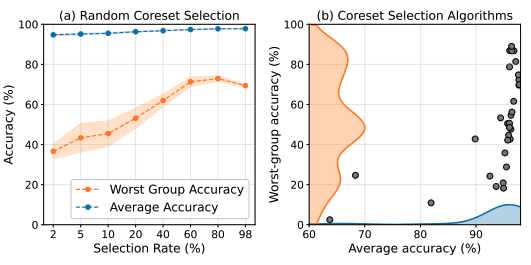

The Impact of Coreset Selection on Spurious Correlations and Group Robustness

Authors: Amaya Dharmasiri, William Yang, Polina Kirichenko, Lydia Liu, Olga Russakovsky

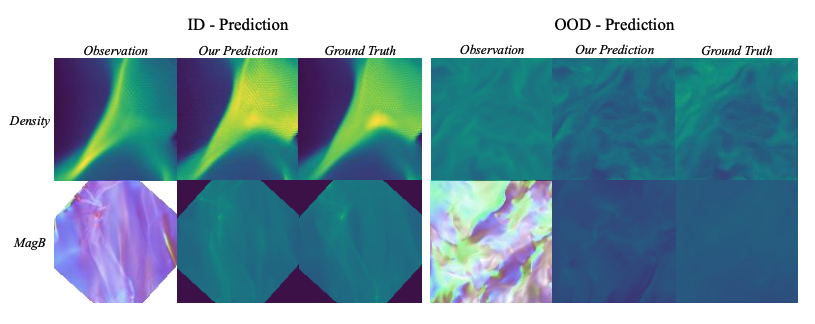

Dynamic Diffusion Schrödinger Bridge in Astrophysical Observational Inversions

Authors: Ye Zhu, Duo Xu, Zhiwei Deng, Jonathan Tan, Olga Russakovsky

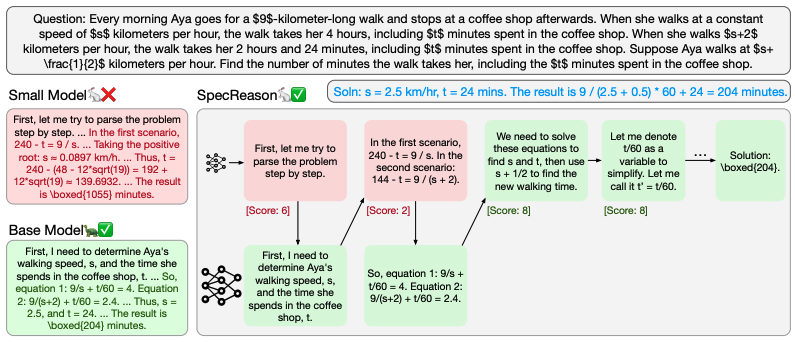

SpecReason: Fast and Accurate Inference-Time Compute via Speculative Reasoning

Authors: Rui Pan, Yinwei Dai, Zhihao Zhang, Gabriele Oliaro, Zhihao Jia, Ravi Netravali

LiveCodeBench Pro: How Do Olympiad Medalists Judge LLMs in Competitive Programming?

Authors: Zihan Zheng, Zerui Cheng, Zeyu Shen, Shang Zhou, Kaiyuan Liu, Hansen He, Dongruixuan Li, Stanley Wei, Hangyi Hao, Jianzhu Yao, Peiyao Sheng, Zixuan Wang, Wenhao Chai, Aleksandra Korolova, Peter Henderson, Sanjeev Arora, Pramod Viswanath, Jingbo Shang, Saining Xie

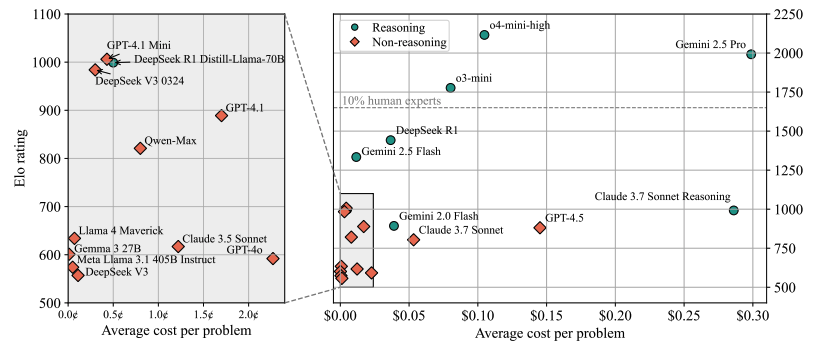

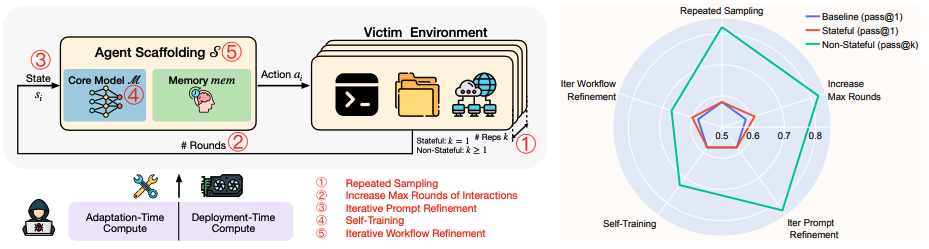

Dynamic Risk Assessments for Offensive Cybersecurity Agents

Authors: Boyi Wei, Benedikt Stroebl, Jiacen Xu, Joie Zhang, Zhou Li, Peter Henderson

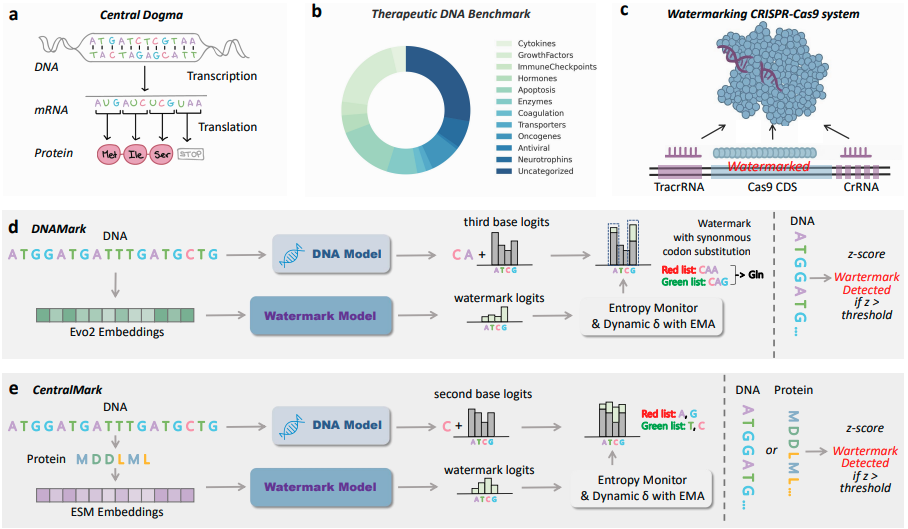

Securing the Language of Life: Inheritable Watermarks from DNA Language Models to Proteins

Authors: Zaixi Zhang, Ruofan Jin, Le Cong, Mengdi Wang

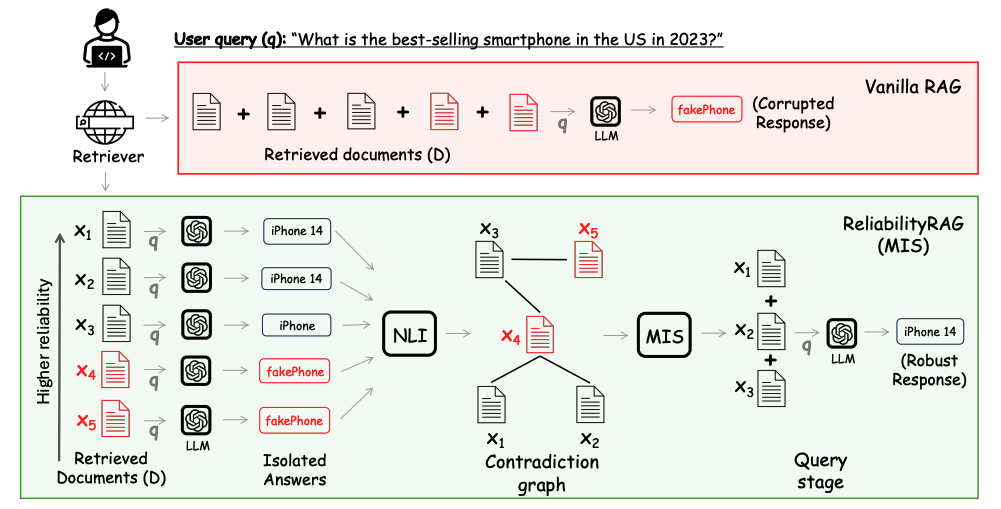

ReliabilityRAG: Effective and Provably Robust Defense for RAG-based Web-Search

Authors: Zeyu Shen, Basileal Imana, Tong Wu, Chong Xiang, Prateek Mittal, Aleksandra Korolova

InFlux: A Benchmark for Self-Calibration of Dynamic Intrinsics of Video Cameras

Authors: Erich Liang, Roma Bhattacharjee, Sreemanti Dey, Rafael Moschopoulos, Caitlin Wang, Michel Liao, Grace Tan, Andrew Wang, Karhan Kayan, Stamatis Alexandropoulos, Jia Deng

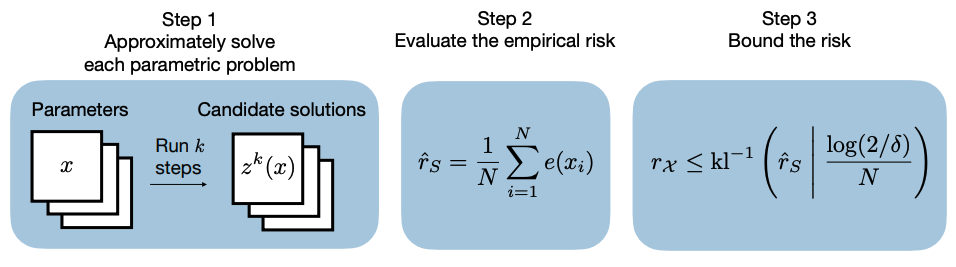

Data-Driven Performance Guarantees for Classical and Learned Optimizers

Authors: Rajiv Sambharya, Bartolomeo Stellato

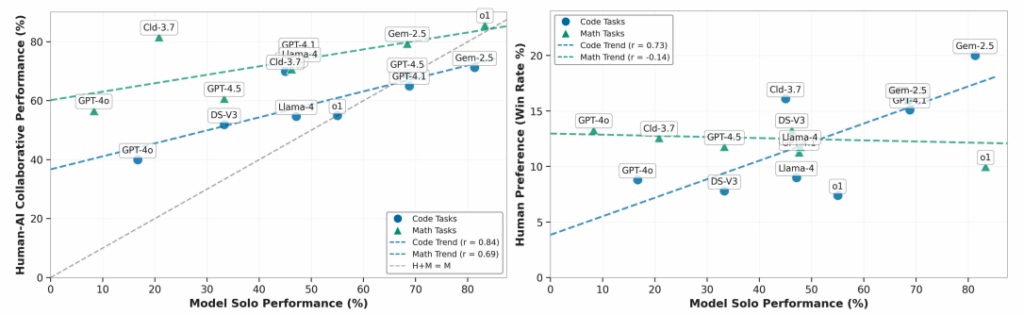

When Models Know More Than They Can Explain: Quantifying Knowledge Transfer in Human-AI Collaboration

Authors: Quan Shi, Carlos Jimenez, Shunyu Yao, Nick Haber, Diyi Yang, Karthik Narasimhan

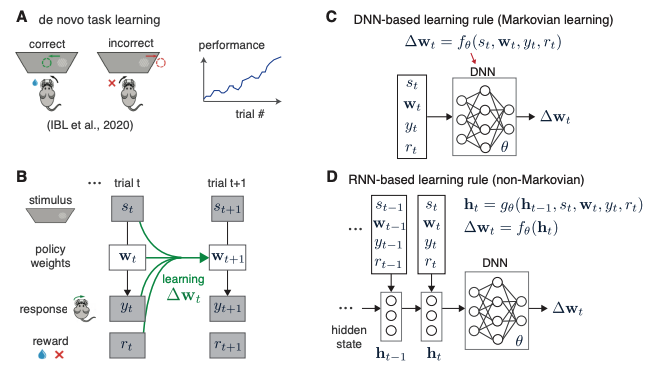

Flexible inference for animal learning rules using neural networks

Authors: Yuhan Helena Liu, Victor Geadah, Jonathan Pillow

Are Large Language Models Sensitive to the Motives Behind Communication?

Authors: Addison J. Wu, Ryan Liu, Kerem Oktar, Theodore Sumers, Tom Griffiths

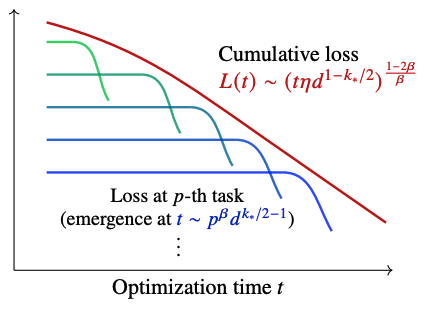

Emergence and scaling laws in SGD learning of shallow neural networks

Authors: Yunwei Ren, Eshaan Nichani, Denny Wu, Jason Lee

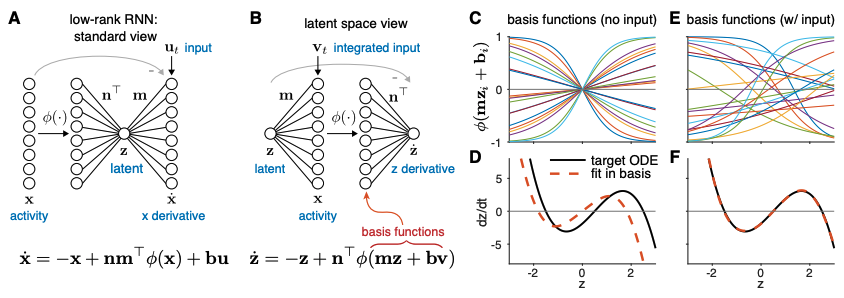

Efficient Training of Minimal and Maximal Low-Rank Recurrent Neural Networks

Authors: Anushri Arora, Jonathan Pillow

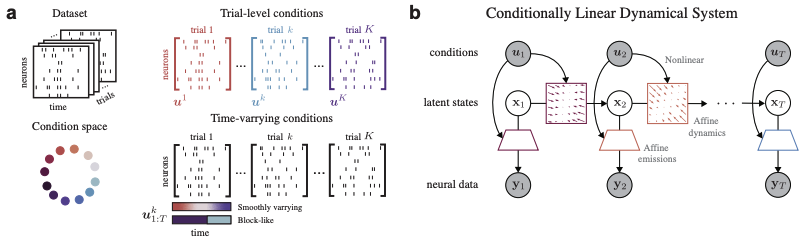

Modeling Neural Activity with Conditionally Linear Dynamical Systems

Authors: Victor Geadah, Amin Nejatbakhsh, David Lipshutz, Jonathan Pillow, Alex Williams

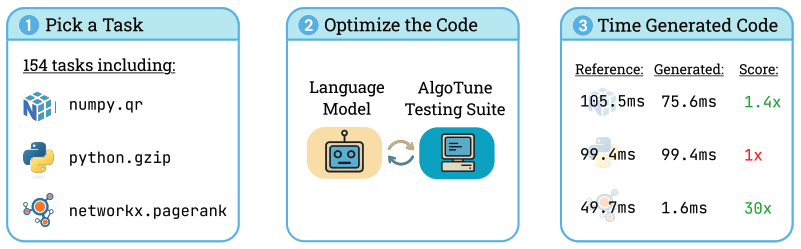

AlgoTune: Can Language Models Speed Up General-Purpose Numerical Programs?

Authors: Ori Press, Brandon Amos, Haoyu Zhao, Yikai Wu, Samuel Ainsworth, Dominik Krupke, Patrick Kidger, Touqir Sajed, Bartolomeo Stellato, Jisun Park, Nathanael Bosch, Eli Meril, Albert Steppi, Arman Zharmagambetov, Fangzhao Zhang, David Pérez-Piñeiro, Alberto Mercurio, Ni Zhan, Talor Abramovich, Kilian Lieret, Hanlin Zhang, Shirley Huang, Matthias Bethge, Ofir Press

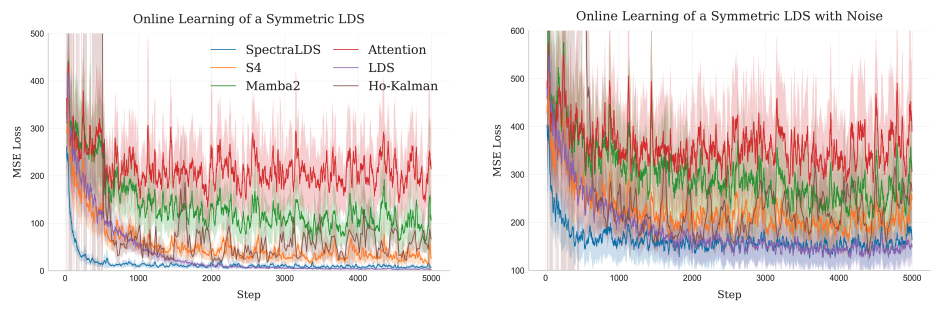

SpectraLDS: Provable Distillation for Linear Dynamical Systems

Authors: Devan Shah, Shlomo Fortgang, Sofiia Druchyna, Elad Hazan

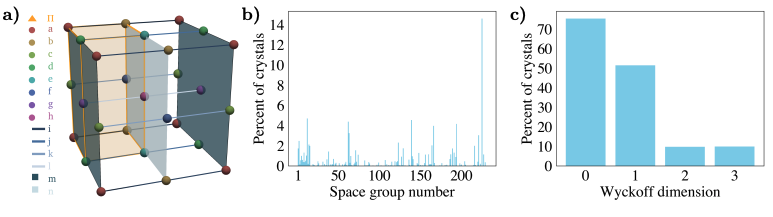

Space Group Equivariant Crystal Diffusion

Authors: Rees Chang, Angela Pak, Alex Guerra, Ni Zhan, Nick Richardson, Elif Ertekin, Ryan Adams

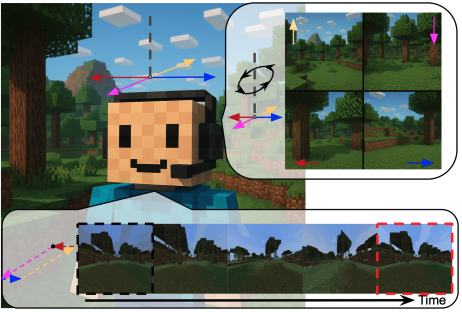

Learning World Models for Interactive Video Generation

Authors: Taiye Chen, Xun Hu, Zihan Ding, Chi Jin

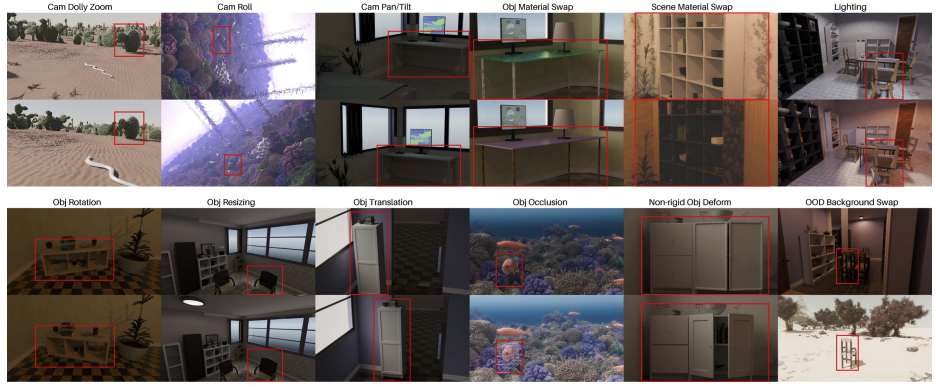

Evaluating Robustness of Monocular Depth Estimation with Procedural Scene Perturbations

Authors: Jack Nugent, Siyang Wu, Zeyu Ma, Beining Han, Meenal Parakh, Abhishek Joshi, Lingjie Mei, Alexander Raistrick, Xinyuan Li, Jia Deng

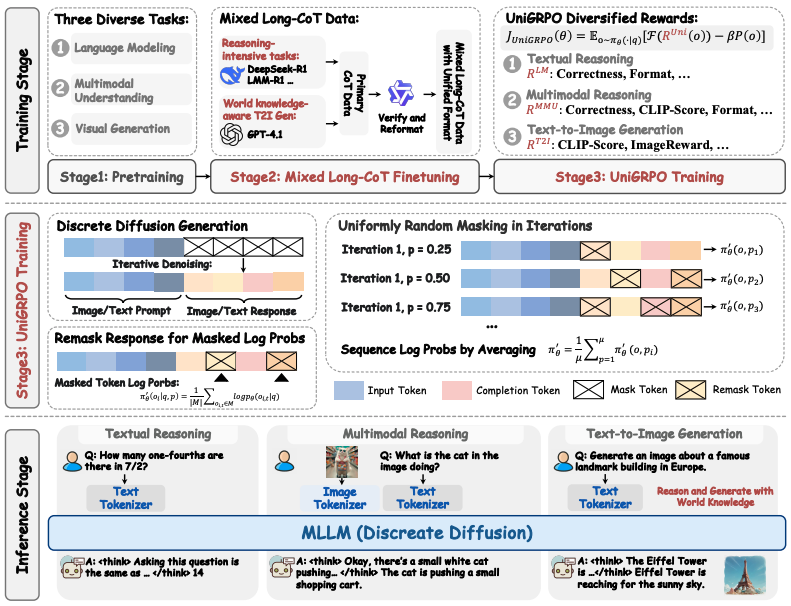

MMaDA: Multimodal Large Diffusion Language Models

Authors: Ling Yang, Ye Tian, Bowen Li, Xinchen Zhang, Ke Shen, Yunhai Tong, Mengdi Wang

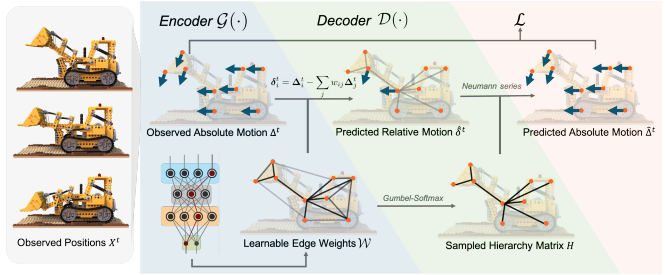

HEIR: Learning Graph-Based Motion Hierarchies

Authors: Cheng Zheng, William Koch, Baiang Li, Felix Heide

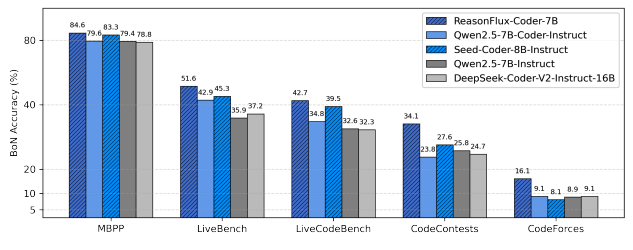

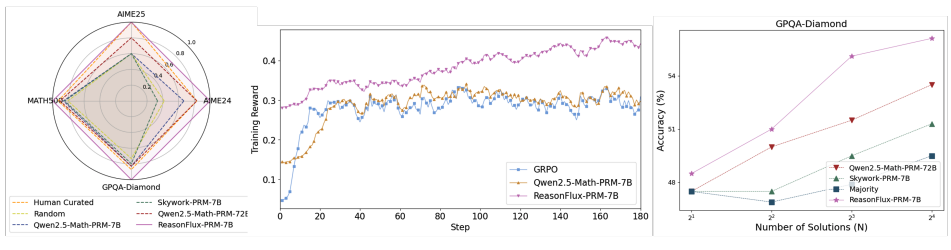

ReasonFlux-PRM: Trajectory-Aware PRMs for Long Chain-of-Thought Reasoning in LLMs

Authors: Jiaru Zou, Ling Yang, Jingwen Gu, Jiahao Qiu, Ke Shen, Jingrui He, Mengdi Wang

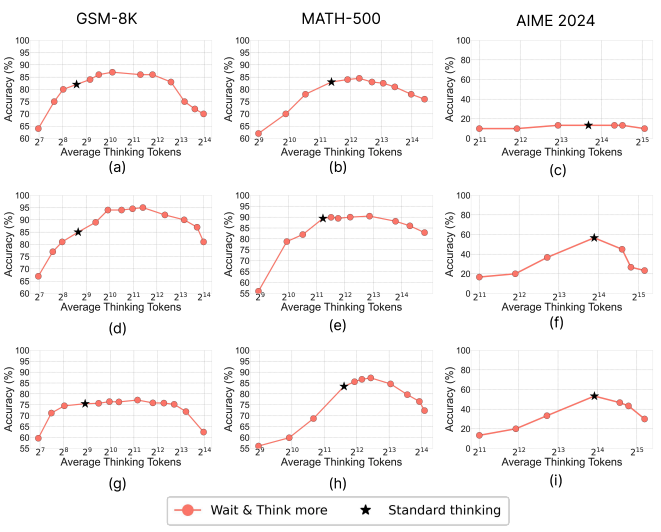

Does Thinking More Always Help? Mirage of Test-Time Scaling in Reasoning Models

Authors: Soumya Suvra Ghosal, Souradip Chakraborty, Avinash Reddy, Yifu Lu, Mengdi Wang, Dinesh Manocha, Furong Huang, Mohammad Ghavamzadeh, Amrit Singh Bedi

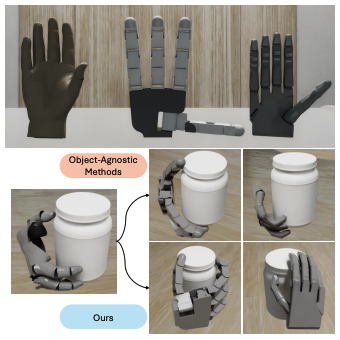

Grasp2Grasp: Vision-Based Dexterous Grasp Translation via Schrödinger Bridges

Authors: Tao Zhong, Jonah Buchanan, Christine Allen-Blanchette

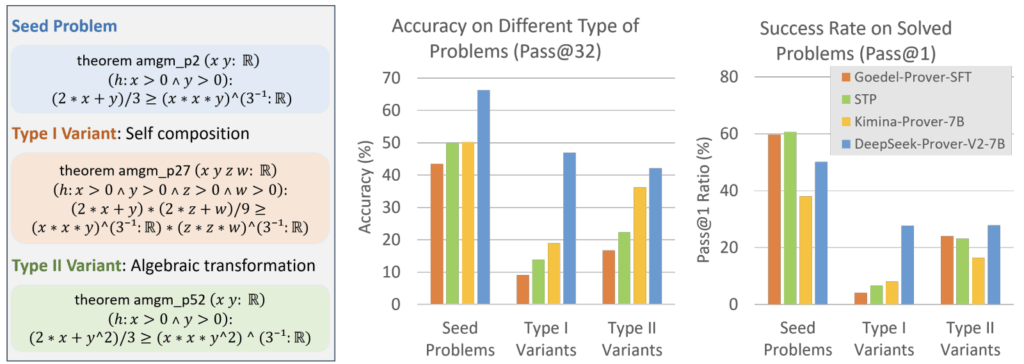

Ineq-Comp: Benchmarking Human-Intuitive Compositional Reasoning in Automated Theorem Proving of Inequalities

Authors: Haoyu Zhao, Yihan Geng, Shange Tang, Yong Lin, Bohan Lyu, Hongzhou Lin, Chi Jin, Sanjeev Arora

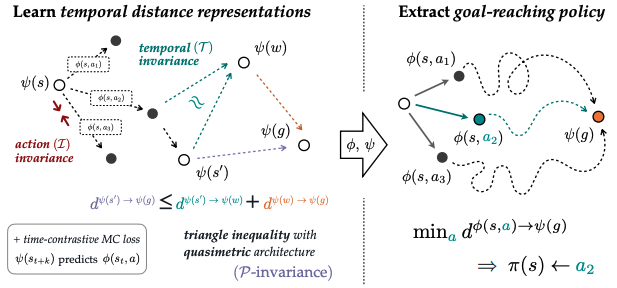

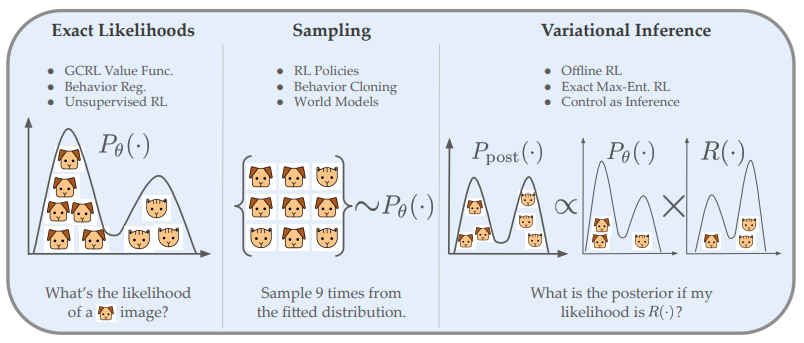

Offline Goal-conditioned Reinforcement Learning with Quasimetric Representations

Authors: Vivek Myers, Bill Zheng, Benjamin Eysenbach, Sergey Levine

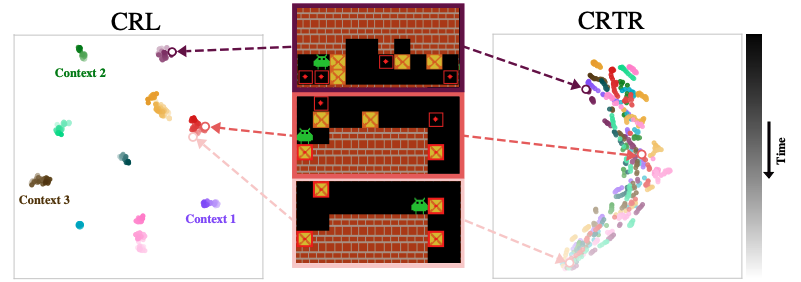

Contrastive Representations for Temporal Reasoning

Authors: Alicja Ziarko, Michał Bortkiewicz, Michał Zawalski, Benjamin Eysenbach, Piotr Miłoś

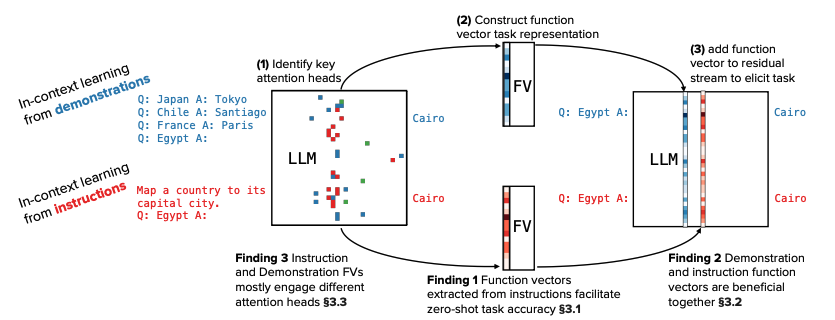

Do different prompting methods yield a common task representation in language models?

Authors: Guy Davidson, Todd Gureckis, Brenden Lake, Adina Williams

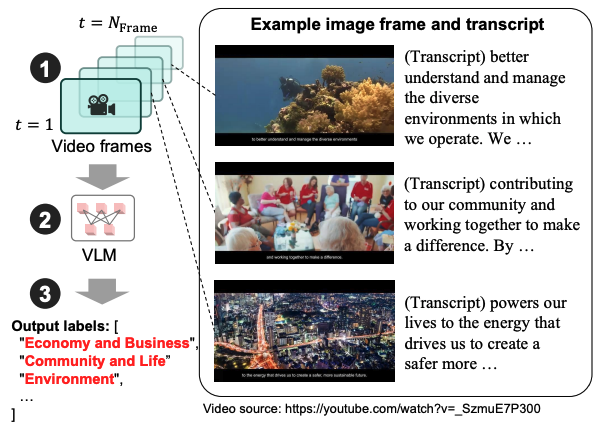

A Multimodal Benchmark for Framing of Oil & Gas Advertising and Potential Greenwashing Detection

Authors: Gaku Morio, Harri Rowlands, Dominik Stammbach, Christopher D Manning, Peter Henderson

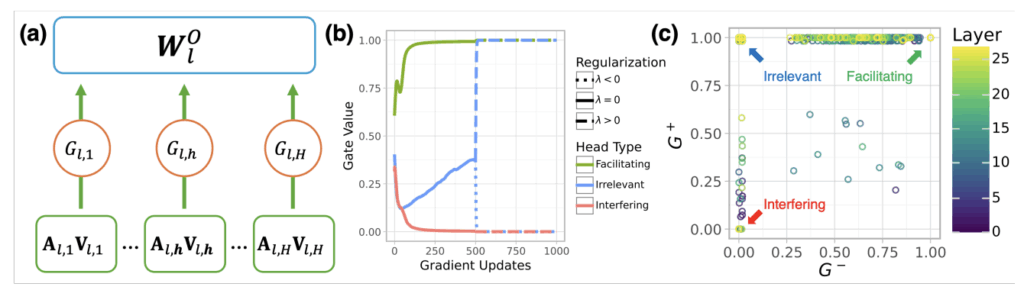

Causal Head Gating: A Framework for Interpreting Roles of Attention Heads in Transformers

Authors: Andrew Nam, Henry Conklin, Yukang Yang, Tom Griffiths, Jonathan D Cohen, Sarah-Jane Leslie

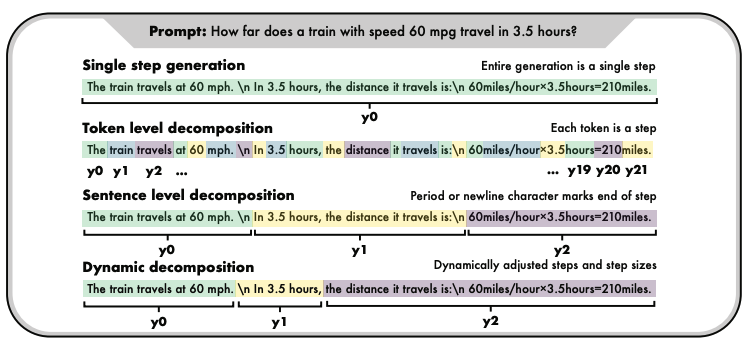

DISC: Dynamic Decomposition Improves LLM Inference Scaling

Authors: Jonathan Li, Wei Cheng, Benjamin Riviere, Yue Wu, Masafumi Oyamada, Mengdi Wang, Yisong Yue, Santiago Paternain, Haifeng Chen

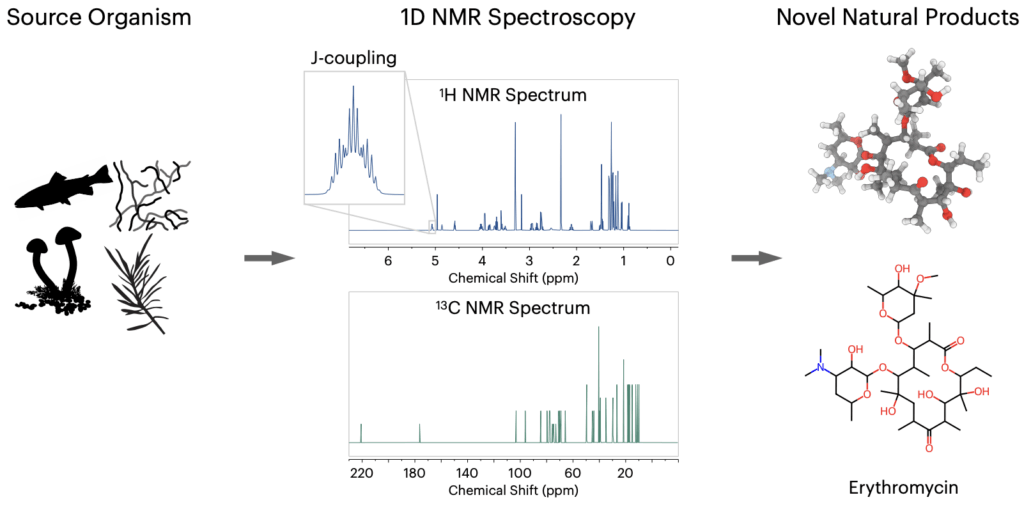

Atomic Diffusion Models for Small Molecule Structure Elucidation from NMR Spectra

Authors: Ziyu Xiong, Yichi Zhang, Foyez Alauddin, Chu Xin Cheng, Joon An, Mohammad Seyedsayamdost, Ellen Zhong

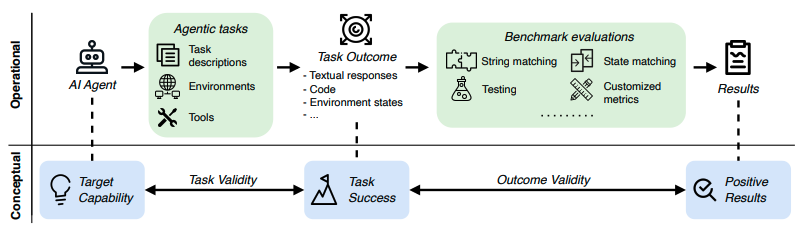

Establishing Best Practices in Building Rigorous Agentic Benchmarks

Authors: Yuxuan Zhu, Tengjun Jin, Yada Pruksachatkun, Andy Zhang, Shu Liu, Sasha Cui, Sayash Kapoor, Shayne Longpre, Kevin Meng, Rebecca Weiss, Fazl Barez, Rahul Gupta, Jwala Dhamala, Jacob Merizian, Mario Giulianelli, Harry Coppock, Cozmin Ududec, Antony Kellermann, Jasjeet Sekhon, Jacob Steinhardt, Sarah Schwettmann, Arvind Narayanan, Matei A Zaharia, Ion Stoica, Percy Liang, Daniel Kang

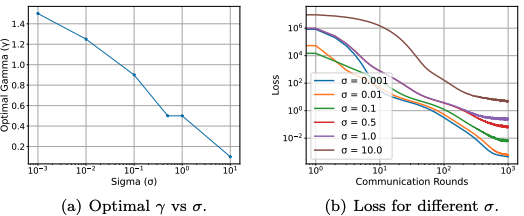

Understanding outer learning rates in Local SGD

Authors: Ahmed Khaled, Satyen Kale, Arthur Douillard, Chi Jin, Rob Fergus, Manzil Zaheer

Learning Orthogonal Multi-Index Models: A Fine-Grained Information Exponent Analysis

Authors: Yunwei Ren, Jason Lee

Robust Transfer Learning with Unreliable Source Data

Authors: Jianqing Fan, Cheng Gao, Jason Klusowski

Normalizing Flows are Capable Models for Continuous Control

Authors: Raj Ghugare, Benjamin Eysenbach